The data bundle containing the images used in this exercise is available for download here, and the Jupyter notebook is here. The images were grabbed from Google image search, so the dataset realistically includes noise, as the data in most practical problems does. The neural networks should be able to handle reasonable amounts of such noise.

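```python
from fastai import *
from fastai.vision import *

# Seed NumPy's RNG so the random train/validation split is reproducible across restarts
np.random.seed(77)

%matplotlib inline

path = Path('/home/jupyter/tutorials/data/equine3')
classes = ['mule', 'horse', 'donkey']

# Each class has a text file of image URLs (urls_mule.txt, ...) inside `path`
for c in classes:
    print(c)
    file = f'urls_{c}.txt'
    print(file)
    # Download up to 200 images into path/<class> ...
    download_images(path/file, path/c, max_pics=200)
    # ... then delete files that cannot be opened and shrink images larger than 500 px
    verify_images(path/c, delete=True, max_size=500)
```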
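Because `verify_images` deletes files it cannot open, it can be worth checking how many images per class survived before training. Below is a minimal sketch that uses only the standard library. `count_images_per_class` is a hypothetical helper, not part of fastai. It simply counts the regular files in each class folder and reports 0 for a missing folder.

```python
from pathlib import Path

def count_images_per_class(root, classes):
    """Hypothetical helper: return {class_name: number of files in root/class_name}."""
    root = Path(root)
    counts = {}
    for c in classes:
        folder = root / c
        if folder.is_dir():
            counts[c] = sum(1 for f in folder.iterdir() if f.is_file())
        else:
            counts[c] = 0  # folder missing, e.g. download failed entirely
    return counts

if __name__ == "__main__":
    path = Path('/home/jupyter/tutorials/data/equine3')
    print(count_images_per_class(path, ['mule', 'horse', 'donkey']))
```

A large imbalance between classes here would also show up later in the per-class training counts that `learntis` prints.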

def learntis(resnet, sz, bs):

data = ImageDataBunch.from_folder(path, train=".", valid_pct=0.2,

ds_tfms=get_transforms(), size=sz, bs=bs, num_workers=4).normalize(imagenet_stats)

cat = []

for i in range(len(data.train_ds)):

cat.append(f'{data.train_ds.y[i]}'.split()[0])

cat = np.asarray(cat)

for i in range(data.c):

print(len(np.where(cat==data.classes[i])[0]), data.classes[i])

learn = create_cnn(data, resnet, metrics=error_rate)

learn.fit_one_cycle(4)

learn.unfreeze()

learn.lr_find()

learn.recorder.plot()

learn.fit_one_cycle(2, max_lr=slice(3e-5,3e-4))

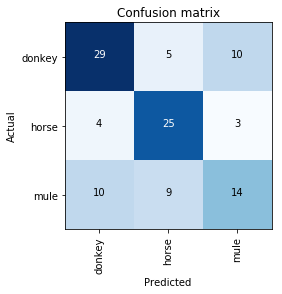

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

wrongs = 0

for t in interp.most_confused():

wrongs += t[2]

wrongs = int(wrongs)

print(wrongs, 'wrong')

interp.plot_top_losses(wrongs, figsize=(15,11))learntis(models.resnet18, 100, 128)

We get:

41 wrong
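The "wrong" count comes from `interp.most_confused()`. In fastai v1, that call returns (actual, predicted, count) tuples for every off-diagonal cell of the confusion matrix whose count reaches `min_val` (1 by default). Summing the counts therefore gives the total number of misclassified validation images, that is, the matrix total minus its diagonal. The sketch below mimics this on a plain nested list. The function names and the confusion matrix are made up for illustration. They are not fastai's code and not the numbers from this run.

```python
from operator import itemgetter

def most_confused(cm, classes, min_val=1):
    """Sketch of most_confused on a nested list.

    cm[i][j] = number of validation images of class i predicted as class j.
    Returns (actual, predicted, count) for off-diagonal cells >= min_val, largest first.
    """
    res = [(classes[i], classes[j], cm[i][j])
           for i in range(len(classes)) for j in range(len(classes))
           if i != j and cm[i][j] >= min_val]
    return sorted(res, key=itemgetter(2), reverse=True)

def count_wrong(cm, classes):
    """Sum the counts, as the `wrongs` loop in learntis does."""
    return sum(t[2] for t in most_confused(cm, classes))

# Made-up confusion matrix, for illustration only (rows: actual, columns: predicted)
classes = ['donkey', 'horse', 'mule']
cm = [[30, 2, 5],
      [1, 40, 3],
      [4, 2, 25]]
print(most_confused(cm, classes))
print(count_wrong(cm, classes))  # → 17
```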

```python
learntis(models.resnet34, 224, 64)
```

We get:

27 wrong
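Every run fine-tunes the unfrozen network with `max_lr=slice(3e-5,3e-4)`. Passing a slice makes fastai use discriminative learning rates. The earliest layer group gets the smallest rate and the head gets the largest, with geometrically spaced values in between. The sketch below re-implements that spacing in plain Python. It assumes three layer groups, which is our understanding of what `create_cnn` builds for ResNets. `even_mults` here is our own sketch, modeled on the fastai v1 helper of the same name.

```python
def even_mults(start, stop, n):
    """Return n learning rates spaced geometrically from start to stop (inclusive).

    Sketch modeled on fastai v1's even_mults, used when max_lr is a slice.
    """
    if n == 1:
        return [stop]
    step = (stop / start) ** (1 / (n - 1))  # constant ratio between neighbouring groups
    return [start * step ** i for i in range(n)]

# Assumption: three layer groups (early body, later body, head)
lrs = even_mults(3e-5, 3e-4, 3)
print([f'{lr:.2e}' for lr in lrs])
```

With three groups, the middle rate is 3e-5 × √10, about 9.5e-5. Pretrained early layers therefore move roughly ten times more slowly than the freshly trained head.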

```python
learntis(models.resnet50, 299, 32)
```

And we get:

24 wrong
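Putting the three runs side by side, the relative drop in misclassified images against the ResNet-18 baseline is simple arithmetic on the counts above. Each run changes the architecture, image size and batch size together, so these numbers do not isolate the effect of any one of them. The validation split may also differ between calls, because only a single seed is set at the top of the notebook. Here is a small sketch; `relative_reduction` is just an illustrative helper:

```python
# Results reported above: (architecture, image size, batch size, wrong count)
runs = [
    ('resnet18', 100, 128, 41),
    ('resnet34', 224, 64, 27),
    ('resnet50', 299, 32, 24),
]

def relative_reduction(baseline, value):
    """Fraction by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline

baseline = runs[0][3]
for arch, size, bs, wrong in runs:
    print(f'{arch}: size={size}, bs={bs}, wrong={wrong}, '
          f'{relative_reduction(baseline, wrong):.0%} fewer errors than resnet18')
```

This prints reductions of about 34% for ResNet-34 and 41% for ResNet-50 relative to ResNet-18.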